How AI is Secretly Flattening Your World

Sreekant Sreedharan, an accomplished entrepreneur, explores the roots of artificial intelligence and its many facets.

Picture yourself sitting in a first-grade English language test, faced with a seemingly straightforward question:

“The colour of a crow is ___.”

As you attempt to provide an answer, your mind intuitively breaks down the sentence into its constituent concepts, rearranging and restructuring them into a coherent sentence until you arrive subconsciously at the solution:

“If a crow is a blackbird, what colour is the bird?”

The correct answer, of course, is “black.”

This process of breaking down language into its fundamental concepts and restructuring it into a coherent response by applying statistics and logic is precisely what AI chatbots like ChatGPT are designed to do.

These systems are powered by vast networks of interconnected computing units called neurons, which are trained on massive datasets culled from the Internet – everything from social media feeds and news sites to encyclopedias and blogs – all in service of decoding and answering questions with responses that are deceptively human-like.

Unlike the cells in the human brain, however, these neural networks are not organic but rather machines operating statistical algorithms designed to mimic the behavior of the human mind through computer interactions that are both detached and strikingly intelligent and natural. At the forefront of this rapidly advancing technology is a new form of artificial intelligence known as Generative AI, which promises to revolutionize every industry where human ingenuity was once the sole driver of innovation. Experts in the field now predict that the impact of this technology will be as profound as the invention of the steam engine or the radio, with an unparalleled potential for disruptive economic displacement.

This is the first in a series of articles to explore the hundred-year history of computing technology and how it has brought us to this critical juncture in history. In subsequent installments, we will delve into how a series of interconnected global events are fundamentally reshaping how we work, learn, and socialize in a connected world that is changing at an unprecedented pace.

Rise of Computational Machines (1900-2000)

The roots of modern computing can be traced back to ancient Chinese, Greek, and Indian philosophical works on mathematical logic, and perhaps the most comprehensive treatment of logic from this period is found in Aristotle’s collected works on the subject, the Organon. Yet it took humanity another two millennia to put ancient theory into practice. It was not until the 1820s, when Charles Babbage designed the first mechanical computer, the Difference Engine, that theoretical concepts of mathematical logic began to take physical form. Babbage’s collaborator in this work was an extraordinary woman, Lady Ada Lovelace, who, in a remarkable leap of ingenuity, was the first to recognize that a mechanical computer had applications beyond pure calculation. She published the first algorithm intended to be carried out by such a machine, written for Babbage’s later Analytical Engine, marking a significant milestone in computing history.

Despite the foundational pieces being already in place for the emergence of the modern computer in the early 19th century, it would take more than a century for the first digital computers powered by electricity to appear. This was made possible by the invention of electronic switching devices known as vacuum tubes. This epochal period is now regarded as the birth of modern computing.

The birth of modern computing.

In the next two decades, a team of inventors led by William Shockley created the transistor and ushered in the era of semiconductor technology. It was a revolutionary breakthrough that would change the course of history. But it was not until September 12th, 1958, that the next big leap occurred: Jack Kilby, working at Texas Instruments while most of his colleagues were away on summer vacation, demonstrated the first integrated circuit, built on a germanium substrate. About six months later, Robert Noyce improved on the design, recognizing the limitations of germanium and fashioning his own chip out of silicon.

The age of the microchip had arrived.

How our relationship with computers has evolved over 100 years.

With the creation of the microchip, an entire industry was born, with Silicon Valley emerging as the Mecca of modern computing technology. This singular technological breakthrough paved the way for the development of computers, smartphones, and many other digital devices that have become integral to our lives.

Rise of the Microchip (1960-2000)

The invention of the integrated circuit was a watershed moment in human history, yet few could have predicted how it would transform the world in unfathomable ways.

The microchip, also known as the semiconductor chip, is a small electronic component that contains complex circuits and transistors that can process and store information. It is the everyday name for the integrated circuit, a revolutionary technology that transformed how we design and build electronic devices.

The integrated circuit, or IC, is a miniaturized electronic circuit consisting of many transistors, resistors, and capacitors embedded on a single chip of semiconductor material, usually silicon. This enables the circuit to perform multiple functions on a tiny piece of hardware, making it faster, smaller, and more reliable than traditional electronic circuits that use discrete components.

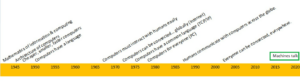

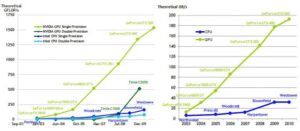

How rapid advances in computing power have shaped the computing industry. (Source: ResearchGate)

The microchip is at the heart of modern electronics, providing the means to store and process data on a small scale. Its development paved the way for the digital revolution we experience today, allowing for the creation of computers, smartphones, and other digital devices we use daily. Today, the microchip continues to drive innovation and technology, powering everything from medical devices to spacecraft. But it was advances in computer networking, the technology used to interconnect computers, that led to the next important innovation: the Internet.

Birth of the Internet (1960-2000)

The Internet, a ubiquitous global network of interconnected computers and servers, has revolutionized how we communicate, work, learn, and entertain ourselves. It is hard to imagine a world without the Internet, yet it is a relatively recent phenomenon with origins in the Cold War era.

The Internet’s story begins in the 1960s, during the height of the Cold War when the U.S. Department of Defense sought to create a communication system that could withstand a nuclear attack. The Advanced Research Projects Agency Network (ARPANET) was developed in response to this challenge. This ground-breaking network was the first to use packet switching, a technique that divides data into small packets sent independently across the network and then reassembled at their destination.
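The idea behind packet switching can be sketched in a few lines of Python. This is only a toy illustration, not how ARPANET was implemented: the function names `to_packets` and `reassemble` and the packet size are assumptions made for the example, and a real network also has to deal with lost packets, routing, and error checking.

```python
import random

def to_packets(message: str, size: int) -> list[tuple[int, str]]:
    """Split a message into (sequence_number, chunk) packets of at most `size` characters."""
    return [(i // size, message[i:i + size]) for i in range(0, len(message), size)]

def reassemble(packets: list[tuple[int, str]]) -> str:
    """Put packets back in sequence-number order and join their chunks."""
    return "".join(chunk for _, chunk in sorted(packets))

message = "The colour of a crow is black."
packets = to_packets(message, size=8)

# Each packet travels independently, so arrival order is not guaranteed.
random.shuffle(packets)

restored = reassemble(packets)
print(restored == message)
```

Because every packet carries its own sequence number, the receiver can rebuild the original message no matter what order the pieces arrive in.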

ARPANET was initially intended for military and academic purposes, but it quickly evolved into a means of communication for the broader public. The first email was sent in 1971, and by the 1980s, the use of the Internet had expanded to include commercial and personal use. The introduction of the World Wide Web in 1991 further accelerated the Internet’s growth, making it more accessible and user-friendly.

Today, the Internet is a vast interconnected network of millions of computers and servers spanning the globe. It has revolutionized how we live, work, and communicate, making it possible to connect with people and access information from anywhere in the world, and it has become an integral part of our daily lives.

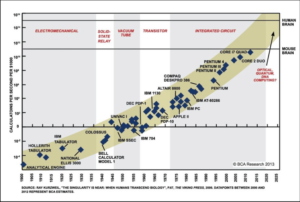

How microprocessors have steadily kept up with Moore’s Law over 50 years. (Source: Markets Insider)

The modern computing industry has been evolving at breathtaking speed for the past 50 years. Powering the rapid rise of the desktop computer, the Internet, and the smartphone is an esoteric economic principle: Moore’s Law. It began as a prediction made by Intel co-founder Gordon Moore in 1965 that the number of transistors on a single integrated circuit would double every year. In 1975 he revised the pace to every two years, and the figure is often quoted as 18 to 24 months. The prediction held true for several decades and became a driving force behind the rapid progress and innovation in the technology industry.

The doubling of transistors on microchips significantly increases computing power and performance while reducing the cost and size of electronic devices. This has led to the development of increasingly powerful and sophisticated computers, smartphones, and other digital devices that have revolutionized how we live, work, and communicate.
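To get a feel for what repeated doubling means, here is a small Python sketch. It assumes a fixed two-year doubling period and, purely as an illustrative starting point, the roughly 2,300 transistors of Intel’s 4004 chip from 1971. The function name `projected_transistors` is made up for this example, and real chips do not follow the curve exactly.

```python
def projected_transistors(start_count: float, years: float,
                          doubling_period_years: float = 2.0) -> float:
    """Project a transistor count forward, assuming it doubles once per period."""
    return start_count * 2 ** (years / doubling_period_years)

# Illustrative starting point: about 2,300 transistors in 1971.
for years in (10, 20, 40):
    print(1971 + years, round(projected_transistors(2300, years)))
```

Ten years of doubling multiplies the count by 32, but forty years multiplies it by more than a million, which is why a seemingly modest rule produces such dramatic results.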

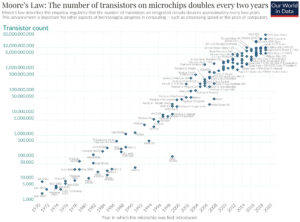

How microprocessors called GPUs have outpaced the performance of conventional chips in a PC.

Moore’s Law has also profoundly impacted the semiconductor industry, driving innovation and competition among chip manufacturers to create smaller, faster, and more efficient microchips. It has been the driving force behind the creation of new technologies, such as artificial intelligence, cloud computing, and the Internet of Things, which have further transformed the way we interact with technology. A fundamental principle governing the evolution of the technology industry, Moore’s Law continues to inspire new breakthroughs in computing and electronics.

By the turn of the century, the desktop computer and the Internet had permeated many aspects of everyday life, and within a decade, the BlackBerry and other smartphones would make mobile access to computing pervasive, placing it literally in the palm of our hands. Commercial computers of the period had crossed a critical threshold in computing capacity and speed: they were now proving capable of running early experimental artificial intelligence (AI) models. The world was on the cusp of a revival in artificial intelligence research.

Rise of Semantic Machines (2000-)

The conceptual roots of artificial intelligence (AI) date back to the 1940s, when mathematician and philosopher Norbert Wiener developed the theory of cybernetics, the study of systems and control in machines and animals. Significant progress in AI itself soon followed. In 1950, British mathematician and computer scientist Alan Turing proposed a test to determine whether a machine could demonstrate intelligent behavior indistinguishable from a human’s. This led to the development of early AI programs that could play games like chess and checkers and even make simple mathematical calculations.

During the 1960s and 1970s, AI research received significant funding from the US government, focusing on developing systems capable of natural language processing and decision-making.

However, progress in AI was slow, and by the 1980s the field had entered a period of stagnation known as the “AI winter”. This was due in part to unrealistic expectations and overhype, as well as a shortage of computational power and data. Despite this setback, advances in machine learning and neural networks in the 1990s paved the way for a resurgence in AI research and the development of new applications such as image and speech recognition. By the turn of the century, the stage was set for a new era of AI innovation that would transform industries from healthcare to finance to transportation.

The start of the new millennium saw a rapid acceleration of interest and investment in artificial intelligence. The emergence of vast amounts of data, coupled with the development of more powerful computing systems and the refinement of algorithms, created an ideal environment for AI to thrive.

In the mid-2000s, the world witnessed the rise of “big data,” which refers to the massive amounts of data generated daily. This explosion of data presented both an opportunity and a challenge for AI developers. On the one hand, it provided abundant information that could be used to train AI models and improve their accuracy. On the other hand, it created a need for new tools and techniques to manage and analyze this data. In response, new technologies, such as Hadoop and Spark, were developed, allowing developers to process and analyze vast amounts of data in a distributed manner. These tools became the foundation of the modern data ecosystem and enabled AI to make significant strides in accuracy and performance.

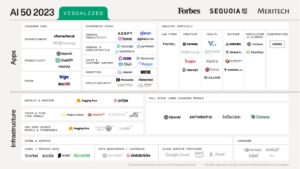

The emerging ecosystem of companies dominating AI (Source: Sequoia Capital)

By 2010, AI had quietly become integral to many aspects of modern life, from virtual personal assistants on smartphones to advanced medical diagnosis systems. The advent of deep learning in the early 2010s marked a new phase in AI development, producing neural networks capable of learning and improving on their own. This breakthrough paved the way for significant advances in natural language processing, image recognition, and robotics, among other areas.

A combination of technological progress, increased data availability, and investment by both public and private organizations fueled the rapid rise of AI during this period. Underpinning this new resurgence of AI is the emergence of new forms of computer models inspired by the structure and function of the human brain and premised on the idea that the connection between neurons changes, and that’s how the brain learns. Neural networks consist of layers of interconnected processing nodes, each of which receives inputs, performs computations, and passes on the outputs to other nodes in the network, enabling them to learn from data and perform complex tasks.
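The layered structure described above can be sketched in plain Python. This is a minimal illustration under stated assumptions: the weights are hand-picked, hypothetical values rather than learned ones, the sigmoid activation is just one common choice, and the function names are invented for the example. In a real system, “learning” means adjusting those weights automatically from data.

```python
import math

def sigmoid(x: float) -> float:
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def layer_forward(inputs, weights, biases):
    """Each node takes a weighted sum of all inputs, adds its bias, and applies sigmoid."""
    return [
        sigmoid(sum(w * x for w, x in zip(node_weights, inputs)) + b)
        for node_weights, b in zip(weights, biases)
    ]

def network_forward(inputs, layers):
    """Pass the outputs of each layer on as the inputs to the next one."""
    activations = inputs
    for weights, biases in layers:
        activations = layer_forward(activations, weights, biases)
    return activations

# Hypothetical network: 2 inputs -> 3 hidden nodes -> 1 output node.
layers = [
    ([[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]], [0.0, 0.1, -0.1]),
    ([[0.7, -0.5, 0.2]], [0.05]),
]
output = network_forward([1.0, 0.0], layers)
print(output)
```

The largest systems in use today follow the same pattern of nodes passing values from layer to layer, only with billions of weights instead of a handful.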

These computer models are the engines powering some of the most powerful Generative AI technologies we currently use, including ChatGPT, DALL-E, and Midjourney. Such advances are made possible by the immense computing capacity of distributed networks of supercomputers, and for nearly a decade they have followed a new law of economics powering the AI economy: the number of neurons in the largest AI systems more than doubles every 12 months (a compound annual growth rate of 120%), far outpacing Moore’s Law. At this staggering rate of growth, GPT-3, the most advanced system circa 2020, had just over half the 86 billion neurons of a human brain, and its training phase consumed as much energy (on the order of 1.2 GWh) as a small town of 175,000 people. (Performance metrics on the scale and energy demands of the latest AI machines are still unpublished, but it is fair to assume that they are considerably larger.)

| Launch | AI Platform & Technology | Organization | Neurons (million) | Parameters (billion) |
| --- | --- | --- | --- | --- |
| 1997 | LSTM | S. Hochreiter and J. Schmidhuber | 0.001 | |
| 1980 | SOM | Teuvo Kohonen | 0.001 | |
| 1998 | LeNet-5 | Yann LeCun | 0.06 | |
| 1999 | HMAX | M. Riesenhuber and T. Poggio | 1 | |
| 2001 | ESN | Herbert Jaeger | 1 | |
| 2010 | DBN | | 10 | |
| 2012 | AlexNet | | 60 | |
| 2014 | VGGNet-16 | Oxford University | 138 | |
| 2014 | GoogleNet (Inception-v1) | | 6 | |
| 2015 | DQN | Google DeepMind | 0.52 | |
| 2015 | GoogleNet (Inception-v2) | | 11.2 | |
| 2015 | GoogleNet (Inception-v2) | | 23.8 | |
| 2016 | GoogleNet (Inception-v4) | | 41.3 | |
| 2016 | Resnet | Microsoft | 60 | |
| 2016 | ResNext | | 60 | |
| 2016 | VGG-19 | Oxford University | 143 | |
| 2016 | Xception | François Chollet | 22.9 | |
| 2017 | BERT | | 110 | |
| 2018 | DigitalReasoning | Google (experimental) | 40000 | 160 |
| 2018 | BERT Large | | 85 | 0.034 |
| 2018 | GPT-2 | OpenAI | 375 | 1.5 |
| 2018 | Megatron-Turing | Microsoft/Nvidia (experimental) | 132500 | 530 |
| 2018 | Wu Dao 2.0 | BAAI | 437.5 | 1750 |
| 2019 | Megatron LM | Nvidia | 2100 | 8.3 |
| 2019 | T5 | | 2750 | 11 |
| 2019 | Lab2D | Deepmind (experimental) | 250 | 1 |
| 2020 | Meena | Google (experimental) | 650 | 2.6 |
| 2020 | GPT-3 | OpenAI | 43750 | 175 |
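The comparison between Moore’s Law and the growth of AI systems comes down to converting between an annual growth rate and a doubling time, which are two ways of stating the same thing. The short Python sketch below shows the arithmetic, assuming steady compound growth. The function names are chosen here for illustration only.

```python
import math

def doubling_time_years(cagr: float) -> float:
    """Years needed to double at a given compound annual growth rate (1.20 means 120%)."""
    return math.log(2) / math.log(1 + cagr)

def cagr_from_doubling_time(years: float) -> float:
    """Compound annual growth rate implied by doubling once every `years` years."""
    return 2 ** (1 / years) - 1

# A 120% annual growth rate, expressed as a doubling time in months.
print(f"{doubling_time_years(1.20) * 12:.1f} months")

# Moore's Law with a two-year doubling period, expressed as an annual growth rate.
print(f"{cagr_from_doubling_time(2.0):.1%}")
```

Under these formulas, growth of 120% a year corresponds to a doubling time of about ten and a half months, while a two-year doubling period works out to roughly 41% a year.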

In retrospect, the 2000s witnessed a significant increase in the use of machine learning, with the widespread adoption of algorithms such as support vector machines and decision trees. With minimal human intervention, these algorithms enabled computers to learn and improve their performance over time, paving the way for the advanced intelligence systems we use today. Consequently, over the past two decades, faster and more capable AI applications have emerged and transformed various industries, including finance, healthcare, the military, industrial automation, and transportation.

Conclusion

Today, computing is an integral part of our lives, powering everything from smartphones to complex business operations. As we reflect on the remarkable journey that brought us to this point, we can see how theoretical musings on mathematical logic that began over two millennia ago have been transformed into an indispensable aspect of modern life. The future is already here, and we must adapt or risk being left behind in a world where advanced artificial intelligence opens new possibilities to enrich modern life as we continue to push the boundaries of what is possible with technology.

In the next part of this series, we will explore how this new technology transforms industries while market and geopolitical events shape modern life in the Age of AI.

(Sreekant Sreedharan is an accomplished entrepreneur and computer scientist passionate about investing in cutting-edge technologies. He works as an investment advisor to the CAIF, where he helps identify promising startups and emerging technologies focused on addressing climate action. Views and opinions expressed in this article are those of the author and do not necessarily reflect the views or positions of any entities he represents.)